You are the administrator of a database that contains 64 lookup tables. These tables store static data that should not change. However, users report that some of this data is being changed. You need to prevent users from modifying the data.

You want to minimize changes to your security model and to your database applications. How should you modify the database?

A. Create a filegroup named LOOKUP. Move the lookup tables to this filegroup. Select the read only check box for the filegroup.

B. Create a database role named datamodifier. Grant SELECT permissions to the datamodifier role. Add all users to the role.

C. Deny INSERT, UPDATE, and DELETE permissions for all users. Create stored procedures that modify data in all tables except lookup tables. Require users to modify data through these stored procedures.

D. Create a view of the lookup tables. Use the view to allow users access to the lookup tables.

Question 1:

Your network contains an Active Directory domain. The domain contains a member server that runs Windows Server 2008 R2. You have a folder named Data that is located on the C drive. The folder has the default NTFS permissions configured. A support technician shares C:\Data by using the File Sharing Wizard and specifies the default settings. Users report that they cannot access the shared folder. You need to ensure that all domain users can access the share. What should you do?

A. Enable access-based enumeration (ABE) on the share.

B. Assign the Read NTFS permission to the Domain Users group.

C. From the Network and Sharing Center, enable public folder sharing.

D. From the File Sharing Wizard, configure the Read permission level for the Domain Users group.

Question 2:

You are the administrator of a SQL Server 2000 computer in your company's personnel department. Employee data is stored in a SQL Server 2000 database. A portion of the database schema is shown in the exhibit.

You want to create a text file that lists these data columns in the following format: title, FirstName, LastName, WorkPhone, PositionName, DepartmentName.

You want to create the text file as quickly as possible. You do not expect to re-create this file, and you want to avoid creating new database objects if possible.

What should you do?

A. Use the bcp utility to export data from each table to a separate text file. Use format files to select the appropriate columns. Merge the data from each text file into a single text file.

B. Create a view that joins data from all three tables and includes only the columns you want to appear in the text file. Use the bcp utility to export data from the view.

C. Create a SELECT query that joins the data from the appropriate columns in the three tables. Add an INTO clause to the query to create a local temporary table. Use the bcp utility to export data from the local temporary table to a text file.

D. Create a SELECT query that joins the data from the appropriate columns in the three tables. Add an INTO clause to the query to create a global temporary table. Use the bcp utility to export data from the global temporary table to a text file.

Question 3:

You are the database administrator for a retail company. The company owns 270 stores. Every month, each store submits approximately 2,000 sales records, which are loaded into a SQL Server 2000 database at the corporate headquarters.

A Data Transformation Services (DTS) package transforms the sales records as they are loaded. The package writes the transformed sales records to the Sales table, which has a column for integer primary key values. The IDENTITY property automatically assigns a key value to each transformed sales record.

After loading this month's sales data, you discover that a portion of the data contains errors. You stop loading data, identify the problem records, and delete those records from the database.

You want to reuse the key values that were assigned to the records that you deleted. You want to assign the deleted key values to the next sales records you load. You also want to disrupt users' work as little as possible.

What should you do?

A. Export all records from the Sales table to a temporary table. Truncate the Sales table, and then reload the records from the temporary table.

B. Export all records from the Sales table to a text file. Drop the Sales table, and then reload the records from the text file.

C. Use the DBCC CHECKIDENT statement to reseed the Sales table's IDENTITY property.

D. Set the Sales table's IDENTITY_INSERT property to ON. Add new sales records that have the desired key values.
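The key gap described in this scenario can be reproduced in a small sketch. It uses Python's built-in `sqlite3` module purely as a stand-in, which is an assumption: SQLite's `AUTOINCREMENT` is not SQL Server's IDENTITY property, and the column layout and sample values are hypothetical. It only illustrates that an automatically assigned key counter keeps advancing after rows are deleted, so the deleted key values are not handed out again by default.

```python
import sqlite3


def next_key_after_delete() -> int:
    """Insert three rows, delete the last two, and return the next assigned key."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical stand-in for the Sales table; AUTOINCREMENT plays the role
    # of an automatically assigned integer key (not SQL Server IDENTITY).
    conn.execute(
        "CREATE TABLE Sales (id INTEGER PRIMARY KEY AUTOINCREMENT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO Sales (amount) VALUES (?)", [(10.0,), (20.0,), (30.0,)]
    )
    # Remove the "problem records" that received keys 2 and 3.
    conn.execute("DELETE FROM Sales WHERE id IN (2, 3)")
    # The next load does not reuse 2 or 3; the counter continues past them.
    cur = conn.execute("INSERT INTO Sales (amount) VALUES (?)", (40.0,))
    new_id = cur.lastrowid
    conn.close()
    return new_id


print(next_key_after_delete())  # prints 4
```

The counter's position is stored separately from the rows themselves, which is why deleting rows alone does not free their key values in this model.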

Question 4:

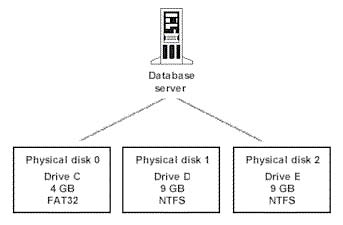

You are the administrator of a SQL Server 2000 computer. The server is configured as shown in the Database Server Configuration exhibit.

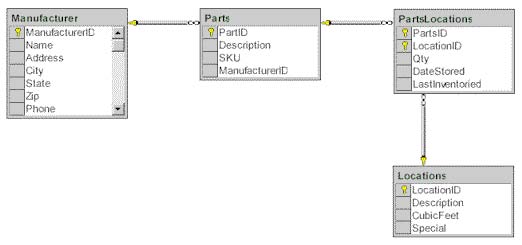

You need to create a new database named Inventory. Employees in your company will use the Inventory database to track inventory data. Users will require immediate responses to queries that help them locate where parts are stored. The tables in the database will be configured as shown in the Database Schema exhibit.

The database will consume 14 GB of disk space. You must configure the data files and transaction log to accelerate query response time.

Which two courses of action should you take? (Each correct answer represents part of the solution. Choose two.)

A. On drive C, create a transaction log. On drive D, create a data file in the PRIMARY filegroup. On drive E, create a data file in the SECONDARY filegroup.

B. On each drive, create a transaction log. On each drive, create a data file in the PRIMARY filegroup.

C. On drive D, create a transaction log. On drive E, create a data file in the PRIMARY filegroup.

D. On the PRIMARY filegroup, create all tables and all indexes.

E. On the PRIMARY filegroup, create all tables. On the SECONDARY filegroup, create all indexes.

F. On the PRIMARY filegroup, create the Parts table and its indexes. On the SECONDARY filegroup, create all other tables and their indexes.

Question 5:

You are the administrator of a SQL Server computer. Users report that the database times out when they attempt to modify data. You use the Current Activity window to examine locks held in the database as shown in the following screenshot.

You need to discover why users cannot modify data in the database, but you do not want to disrupt normal database activities. What should you do?

A. Use the spid 52 icon in the Current Activity window to discover which SQL statement is being executed.

B. Use the sp_who stored procedure to discover who is logged in as spid 52.

C. Use SQL Profiler to capture the activity of the user who is logged in as spid 52.

D. Use System Monitor to log the locks that are granted in the database.

Question 6:

You are the administrator of a Windows 2000 network. The network includes a Windows 2000 Server computer that is used as a file server. More than 800 of Ezonexam.com's client computers are connected to this server.

A shared folder named Data on the server is on an NTFS partition. The data folder contains more than 200 files. The permissions for the data folder are shown in the following table.

| Type of permission | Account | Permission |
| --- | --- | --- |
| Share | Users | Change |
| NTFS | Users | Full Control |

You discover that users are connected to the Data folder. You have an immediate need to prevent 10 of the files in the Data folder from being modified. You want your actions to have the smallest possible effects on the users who are using other files on the server.

Which two actions should you take? (Choose two.)

A. Modify the NTFS permissions for the 10 files.

B. Modify the NTFS permissions for the Data folder.

C. Modify the shared permissions for the Data folder.

D. Log off the users from the network.

E. Disconnect the users from the Data folder.
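To read the permissions table in this question, it helps to recall that for access over the network, the effective permission is the more restrictive of the share permission and the NTFS permission. The sketch below models that rule in Python under a simplifying assumption: permissions are collapsed into a single ordered scale of three levels, and the function name is hypothetical. Real NTFS access control lists are more granular than this.

```python
# Simplified, ordered permission scale (least to most permissive).
# Assumption: share "Change" is treated as one level below "Full Control".
LEVELS = ["Read", "Change", "Full Control"]


def effective_network_permission(share: str, ntfs: str) -> str:
    """Return the more restrictive of the share and NTFS permission levels."""
    return min(share, ntfs, key=LEVELS.index)


# Values from the table: Share = Change, NTFS = Full Control.
print(effective_network_permission("Change", "Full Control"))  # prints Change
```

With the values from the table, users who connect through the share are limited to Change, even though NTFS grants Full Control.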

Question 7:

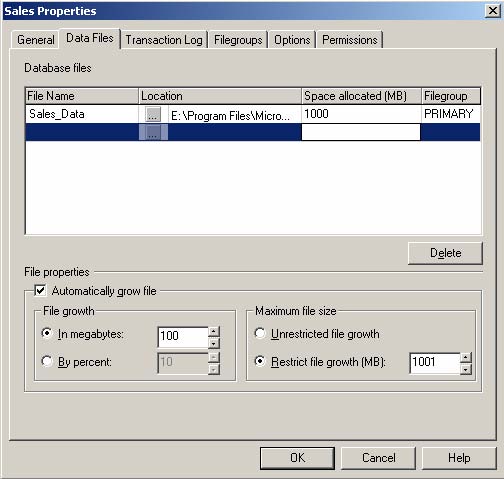

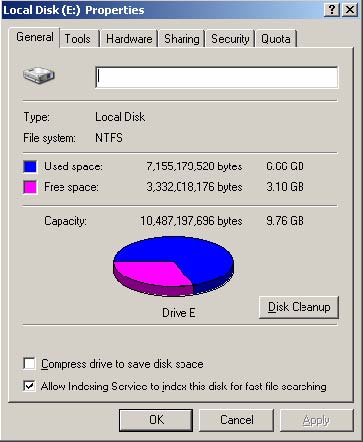

You are the administrator of a SQL Server 2000 computer. The server contains a database named Sales. Users report that they cannot add new data to the database. You examine the properties of the database. The database is configured as shown in the Sales Properties exhibit.

You examine drive E. The hard disk is configured as shown in the Local Disk Properties exhibit.

You want the users to be able to add data, and you want to minimize administrative overhead. What should you do?

A. Increase the maximum file size of Sales_Data to 1,500 MB.

B. Use the DBCC SHRINKDATABASE statement.

C. Set automatic file growth to 10 percent.

D. Create another data file named Sales_Data2 in a new SECONDARY filegroup.

Question 8:

You are the network administrator for your company. The network contains a Windows Server 2003 computer named Server1. Server1 contains two NTFS volumes named Data and Userfiles. The volumes are located on separate hard disks. The Data volume is allocated the drive letter D. The Data volume is shared as \\Server1\Data. The Userfiles volume is mounted on the Data volume as a volume mount point. The Userfiles volume is displayed as the D:\Userfiles folder when you view the local disk drives by using Windows Explorer on Server1. The D:\Userfiles folder is shared as \\Server1\Userfiles.

The files on the Userfiles volume change every day. Users frequently ask you to provide them with previous versions of files. You enable and configure Shadow Copies on the Data volume. You schedule shadow copies to be created once a day. Users report that they cannot recover previous versions of files in the \\Server1\Userfiles shared folder.

You need to enable users to recover previous versions of files on the Userfiles volume. What should you do?

Question 9:

You are the administrator of a SQL Server 2000 computer. The server contains a database that stores financial data. You want to use Data Transformation Services (DTS) packages to import numeric data from other SQL Server computers. The precision and scale values of this data are not defined consistently on the other servers.

You want to prevent any loss of data during the import operations. What should you do?

A. Use the ALTER COLUMN clause of the ALTER TABLE statement to change data types in the source tables. Change the data types so that they will use the lowest precision and scale values of the data that will be transferred.

B. Use the ALTER COLUMN clause of the ALTER TABLE statement to change data types in the destination tables. Change the data types to reflect the highest precision and scale values involved in data transfer.

C. Set a flag on each DTS transformation to require an exact match between source and destination columns.

D. Set the maximum error count for each DTS transformation task equal to the number of rows of data you are importing. Use an exception file to store any rows of data that generate errors.

E. Write a Microsoft ActiveX script for each DTS transformation. Use the script to recast data types to the destination's precision and scale values.
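What "loss of data" means when scale values differ can be shown with a short sketch. It uses Python's standard `decimal` module rather than SQL Server's numeric types, and the function name and sample value are hypothetical. The only point is that fitting a value into a smaller scale discards fractional digits, while a scale at least as large as the source keeps them.

```python
from decimal import Decimal, ROUND_HALF_UP


def fit_to_scale(value: str, scale: int) -> Decimal:
    """Round a numeric string to the given number of fractional digits."""
    # Build a quantum whose exponent is -scale (scale 2 gives hundredths).
    quantum = Decimal(1).scaleb(-scale)
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_UP)


source_value = "1234.5678"            # hypothetical source value with scale 4
print(fit_to_scale(source_value, 2))  # prints 1234.57 (two digits lost)
print(fit_to_scale(source_value, 4))  # prints 1234.5678 (nothing lost)
```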

Question 10:

You are the network administrator for The network contains a Windows Server 2003 computer named TestKing7.

TestKing7 contains two NTFS volumes named Data and TestKingFiles. The volumes are located on separate hard disks. The Data volume is allocated the drive letter D. The Data volume is shared as \\TestKing7\Data. The TestKingFiles volume is mounted on the Data volume as a volume mount point. The TestKingFiles volume is displayed as the D:\TestKingFiles folder when you view the local disk drives by using Windows Explorer on TestKing7. The D:\TestKingFiles folder is shared as \\TestKing7\TestKingfiles.

The files on the TestKingFiles volume change every day. Users frequently ask you to provide them with previous versions of files. You enable and configure Shadow Copies of the Data volume. You schedule shadow copies to be created once a day. Users report that they cannot recover previous versions of the files on the TestKingFiles volume.

What should you do?